i = '10' // i is "10"

i = i + 1 // i is now "101"

i = i - 1 // i is now 100

i = i + 1 // i is now 101

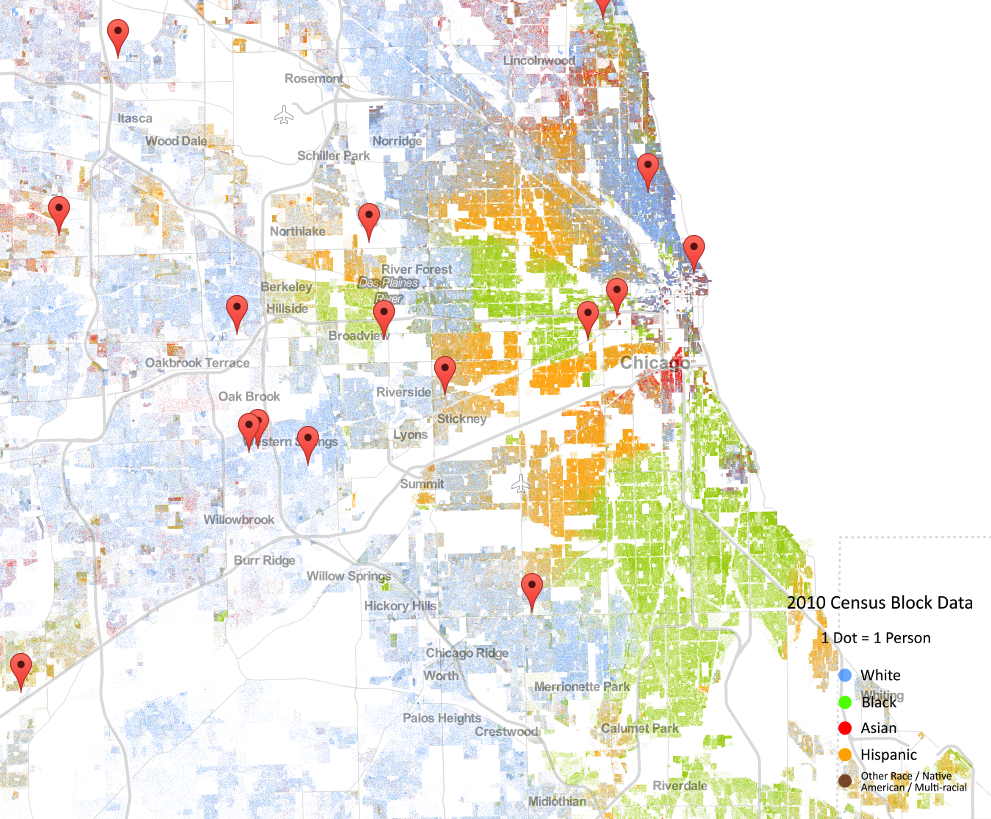

A map released by the Weldon Cooper Center for Public Service at the University of Virginia has been making the rounds online because of the sobering reality it shows. This reality is that in many cities, most starkly Chicago, the groupings of people by race are sharply distinct, hinting that segregation is still very much alive.

I decided to take this map a step further and plot trauma centers (both adult and child, all levels) that are verified by the American College of Surgeons. In Chicago, you can see that heavily Hispanic and African-American areas are devoid of adult trauma centers. This is an interesting phenomenon to study over the rest of the United States. Hopefully further research will continue to explore this.

The data was drawn from the American College of Surgeons (here), parsed, and then rendered using Google Maps API on top of the original Cooper Center map. Additional information from the Illinois Department of Public Health was used as well (here).

I’ve been telling myself during this primary season that “it’ll be okay.” That the primaries are always generally a little more radical early on to appeal to the bases of each party, but ultimately candidates come back to the center in order to have a more moderate appeal in the general election. I really believe this is important not just because of the necessity to win independent votes in the general election, but most importantly because becoming more moderate strikes a conciliatory tone that can unite the country. Unfortunately, both political parties don’t seem to be moving this direction, making the opportunity for a 3rd option candidate potentially the most significant in many elections.

For the GOP, with less than a week until the Iowa caucuses, Donald Trump continues to extend his lead, now capturing 41% of GOP support according to recent polls, with his nearest competitor, Cruz, capturing just 19%. The sensible candidate, Jeb Bush, is being crushed. A great opinion piece by David Axelrod describes a theory that I believe is evident on both sides:

Open-seat presidential elections are shaped by perceptions of the style and personality of the outgoing incumbent. Voters rarely seek the replica of what they have. They almost always seek the remedy, the candidate who has the personal qualities the public finds lacking in the departing executive.

Poor Jeb Bush seems to share the outgoing President’s qualities, and the loudest parts of the GOP have little interest in supporting a candidate that bears any resemblance to President Obama. At the same time, the styles of Trump and Cruz are the polar opposite of Obama’s, a possible explanation for their continued success and high polling in the face of (amazingly) open opposition from the GOP establishment (e.g. the now infamous National Review piece “Against Trump”). Normally I’d chuckle at the state of the GOP, as I believe spending the Obama Presidency scaring the sh*t out of their base to (successfully) gain majorities in the House and Senate has given birth to the dire predicament the party finds itself in now. Ironically, the most important “taking back” tagline leading up to Iowa is the GOP establishment “taking back” their party from the fringe who have thoroughly hijacked it during this primary season.

I said I’d normally chuckle, but the Democrats don’t seem to be moving in a more moderate direction either. While, in the context of David Axelrod’s theory, the GOP is looking for the opposite of Obama as a man, the Democrats seem to be looking for someone politically left of Obama (the same guy who pursued health care reform, presided over the legalization of gay rights, and has publicly spoken about climate change). While Hillary Clinton seems to be the common sense candidate, the Democratic primaries have seen the emergence of democratic socialist Bernie Sanders who has pushed the party even further to the left. Combined with what I believe is an uneasiness with the establishment version of a Democrat (e.g. coziness with big banks), Clinton has had to double down on more progressive policies to try and unite a left wing that sees an opportunity with Sanders to compromise less and do more. Certainly not as dire as the GOP situation, but the narrative still has been driven further from the center instead of toward it.

If both parties seem to be moving away from center instead of towards it, who can unite the country in a general election? The answer might be a legitimate 3rd party candidacy not seen since Ross Perot. Who specifically? Michael Bloomberg. Bloomberg is interesting as he already has name recognition, has deep pockets to properly support his own candidacy, and has been outspoken on issues that both sides of the aisle can get behind. The right would love his economics, and the left would love his social policies (pro-choice, pro gay-rights, pro gun control). If we reach the general election with the Democrats and the GOP each producing their more radical candidates, each bruised and battered from a primary season that may have pushed them further left or right of sensibility, then the opportunity might be there for a uniting 3rd party candidate that can capture the support of independents as well as the disenfranchised establishments of both parties.

There is a big change that computing is going to undergo in the next 5 – 10 years that will most likely fundamentally transform our world as we know it. Nature shows us that rapid change can be dangerous for a species as well as existing ecosystems, so I hope we are fully cognizant of the implications of some of the groundbreaking research we are conducting. I’ve always felt in research that the most important question isn’t whether we can do something, but rather whether we should. How strange it is that these questions usually reserved for doctors and biologists are today confronting Computer Scientists.

The next decade is going to see huge advancements in different types of machines and algorithms that will have an immediate and huge impact. A quantum computer doesn’t feel too far off, yet for certain problems it has computing potential many orders of magnitude greater than any supercomputer we have today. Why does this matter? Well for example, one of the more popular security algorithms, RSA, relies on the fact that prime factorization of large numbers is (still) essentially impossible today. Therefore, a public key built from the product of two large primes, paired with a private key derived from those primes and used to decrypt messages, is relatively secure today. However, a sudden jump in computing power could have far reaching implications for RSA and many other security algorithms.
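To make the RSA point concrete, here is a minimal sketch in Java using deliberately tiny primes. The primes (61 and 53), the exponent, and the class and method names are my own picks for illustration. It is a toy: there is no padding, and real keys use primes hundreds of digits long. What it shows is that anyone who can factor the public modulus can rebuild the private key.

```java
import java.math.BigInteger;

// Toy RSA for illustration only; the numbers are far too small to be secure.
class ToyRsa {
    // Brute-force factoring: fine for a toy modulus, hopeless for a real one.
    static BigInteger[] factor(BigInteger n) {
        for (BigInteger f = BigInteger.valueOf(2);
             f.multiply(f).compareTo(n) <= 0;
             f = f.add(BigInteger.ONE)) {
            if (n.mod(f).signum() == 0) {
                return new BigInteger[] { f, n.divide(f) };
            }
        }
        throw new IllegalArgumentException("no factor found: n is prime");
    }

    // Private exponent d such that e * d = 1 (mod (p - 1)(q - 1)).
    static BigInteger privateExponent(BigInteger p, BigInteger q, BigInteger e) {
        BigInteger phi = p.subtract(BigInteger.ONE).multiply(q.subtract(BigInteger.ONE));
        return e.modInverse(phi);
    }

    public static void main(String[] args) {
        BigInteger p = BigInteger.valueOf(61);
        BigInteger q = BigInteger.valueOf(53);
        BigInteger n = p.multiply(q);           // public modulus
        BigInteger e = BigInteger.valueOf(17);  // public exponent
        BigInteger d = privateExponent(p, q, e);

        BigInteger message = BigInteger.valueOf(65);
        BigInteger cipher = message.modPow(e, n); // anyone can encrypt with (n, e)
        BigInteger plain = cipher.modPow(d, n);   // only the holder of d can decrypt

        // An attacker who can factor n recovers the same private exponent.
        BigInteger[] factors = factor(n);
        BigInteger crackedD = privateExponent(factors[0], factors[1], e);

        System.out.println("cipher = " + cipher + ", decrypted = " + plain);
        System.out.println("attacker recovered d: " + crackedD.equals(d));
    }
}
```

The brute-force loop finishes instantly here only because the modulus is tiny. The security of real RSA rests on that same loop (and every known classical shortcut) being impractical at real key sizes.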

In addition to the machine itself are new and emerging algorithms that attempt to not just exist as a recipe for computing a result, but dynamically adjust (“learn”) over time to varied inputs and input environments. Machine learning methods such as neural networks have an inherent ability to in effect learn and evolve based on exposure to different experiences. Neural networks specifically mimic the biology of a brain, with nodes able to exchange information between each other just like neurons in our own brain. The applications are innumerable, including everything from speech recognition to image analysis.

So what is the “crossing of the rubicon” in this context? It is the fundamental adjustment to how we think about and approach problem solving. The impetus for this came from a discussion about process scheduling in an OS; how a scheduler that uses preemptive scheduling switches contexts (processes) every quantum time period. To aid responsiveness, processes that are interactive with the user are prioritized in a series of queues. As a process ages in the priority queue, its priority weight may be adjusted to push it further up the queue until it is executed. However, there are 2 fundamental assumptions that are made. 1) We can’t know the burst time for a process in the queue and 2) our quantum length is static. Additionally, while we “learn” on a very elementary level over time about the weight of different processes, this information and the process table itself are volatile. All this information is lost each power cycle.

When I was looking at this material, the immediate thought that came to my head was “why can’t we make this better with machine learning?” Why can’t we create a scheduler that learns over time the nature of a process (e.g. burst time in ms) in order to appropriately prioritize the queue for optimized completion time? The static quantum length is a trade-off: it is made as short as possible, up until the point where context switch overhead becomes unreasonable. If we know the processes and have a model for how they behave given environmental variables (CPU load, memory usage, etc.), why couldn’t we dynamically adjust this quantum length to even further optimize the completion time? This would be something incredible, as the scheduler for your computer’s OS would literally be learning your usage habits and be personalized / optimized for you. Truly a ‘personal computer.’
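As a rough sketch of what “learning” a burst time could look like, here is a Java example that uses exponential averaging, a classic textbook estimate that is much simpler than the machine learning model I have in mind. The class name, the method names, and the policy of clamping the quantum to the prediction are all assumptions made for illustration.

```java
// Hypothetical sketch: predicts a process's next CPU burst from its past
// bursts using exponential averaging (a stand-in for a learned model).
class BurstPredictor {
    private final double alpha;  // weight given to the most recent burst, in [0, 1]
    private double estimateMs;   // current prediction for the next burst, in ms

    BurstPredictor(double alpha, double initialEstimateMs) {
        if (alpha < 0.0 || alpha > 1.0) {
            throw new IllegalArgumentException("alpha must be in [0, 1]");
        }
        this.alpha = alpha;
        this.estimateMs = initialEstimateMs;
    }

    // Blend the burst we just measured into the running estimate.
    void observe(double actualBurstMs) {
        estimateMs = alpha * actualBurstMs + (1.0 - alpha) * estimateMs;
    }

    double predictedBurstMs() {
        return estimateMs;
    }

    // Assumed policy: size the quantum to the predicted burst, within fixed bounds.
    double suggestedQuantumMs(double minMs, double maxMs) {
        return Math.max(minMs, Math.min(maxMs, estimateMs));
    }

    public static void main(String[] args) {
        BurstPredictor predictor = new BurstPredictor(0.5, 10.0);
        for (double burstMs : new double[] { 6.0, 4.0, 6.0, 4.0 }) {
            predictor.observe(burstMs);
            System.out.println("observed " + burstMs
                    + " ms, next prediction " + predictor.predictedBurstMs() + " ms");
        }
    }
}
```

A real scheduler would also have to persist these estimates across power cycles, which is exactly the volatile state problem described earlier.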

While this specific example is the impetus for this post, it is a broader example of the way of thinking that I think is going to be crucial to not only pushing the field forward, but also preparing us for the coming transition. While a lot of people much smarter than myself are very divided over the implications of AI and similar technology in society, approaching problem solving from the context of these new capabilities is crucial to understanding the potential (good and bad) of these emerging technologies. I’m really new to the machine learning train, but have already felt its possibilities intertwine with how I read and digest material in courses as well as solve different problems. With that, I encourage you to cross the rubicon as well so you can be on the bleeding edge of potentially the largest computing revolution since the transistor.

**The following is a quick debugging tutorial I wrote for the CS 180 Java class at Purdue. I thought it might be useful to some, so I decided to share it.**

IntelliJ has a great debugging tool that allows you to closely look at how your program executes step-by-step, as well as how methods and variables interact and change over time. Take a look at their tutorial for getting started with the debugger. This will give you the basic skill-set to debug your programs effectively as well as access to some additional documentation that might be helpful.

This includes, but is not limited to:

Those are the basics of debugging. The following is a more CS180-relevant debugging guide based around the 3 main issues you’ll face when programming: compile time errors, runtime errors, and semantic errors.

Quick Note – Syntax vs Semantic: Syntax errors are usually caught at compile time, and have to do with violating the rules of the Java language, which dictate how things should be written (closing all curly brackets, for example). Semantic errors usually come from issues with the logic of your program and manifest themselves at runtime, either causing your program to crash or to produce the wrong output (we’ll see both cases).

The following material is supplementary to the above material, so it is a good idea to review the material above before diving in, as we’ll cover how to interpret the stack trace and then understand the errors using IntelliJ’s debugger. Feel free to have IntelliJ open in parallel to walk through these debugging examples.

Compile time errors:

These are the errors that don’t even let your program get off the ground. A common example of this:

    Error: (15, 13) java: /User/Directory/Program.java:15: cannot find symbol
      symbol  : class Scanner
      location: class Program

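The compiler is giving you some great information here. Namely, there is a problem at line 15, column 13. In IntelliJ, press Command + L (macOS) or Ctrl + G (Windows/Linux) and type in 15:13. This will take you to the location of the issue. What else does the error message tell us? The compiler cannot find a class named Scanner that is referenced inside class Program, which usually means the import for that class (java.util.Scanner) is missing.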

Symbol errors aren’t just reserved for forgetting to import a class. They can also come from using a variable that was never declared:

    Error: (15, 13) java: /User/Directory/Program.java:15: cannot find symbol
      symbol  : variable var
      location: class Program

Run time errors:

Run time errors occur during your program execution, the two most famous being “NullPointerException” and “IndexOutOfBoundsException.” For example, if I was trying to sum a jagged array like this:

    int[][] matrix = {
        {1, 2, 3, 4},
        {5, 6},
    };

    // declare sum variable
    int sum = 0;

    // Compute sum
    for(int i = 0; i < matrix.length; i++) {
        for(int j = 0; j < matrix.length; j++)
            sum += matrix[i][j];
    }

The compiler wouldn’t stop us from trying to run this code as it is syntactically correct. However, my program would crash at some point. Here is what the stack trace would tell me:

    Exception in thread "main" java.lang.ArrayIndexOutOfBoundsException: 2
        at Matrix.main(Matrix.java:24)
        ...

Remember how we could find the line number of the error for compile time errors? Well, we can do the same with run time errors. Something happened at line 24 to cause my Matrix.java program to crash. If eyeballing line 24 doesn’t immediately give away what the issue is, we can use IntelliJ’s debugging tools to find the issue.

There are 3 main debugging operations you’ll want to be familiar with: setting breakpoints, stepping through the execution, and setting Watches.

Returning to our Matrix example, since something bad is happening at line 24, I am going to set a breakpoint there. When in debugging mode, this tells IntelliJ to stop just before this line is executed. To set a breakpoint, click to the right of the line number that you want to set the breakpoint at. IntelliJ will place a red circle there and highlight the line in dark red to indicate that a breakpoint has been set.

Now we are ready to debug. To do so, you can click the image of a bug in the top right that is next to the play button that you usually press to run your program.

Now you’ll have a footer in IntelliJ that looks something like this:

Take a few seconds to get familiar with this window. In frames, it tells us the method (main()) that we are currently in. Next to this, there is a list of variables and their values. As we “step” through the program execution, these values will update – allowing us to pinpoint the precise values for which our program breaks.

When we “step” through our program, we are basically giving IntelliJ permission to execute the next line and then pause again. The line that is about to be executed is highlighted in blue.

So with that, let’s step through our program by clicking the “Step into” button:

After one click, you will notice that the value for sum has changed. Another click and you’ll see the value for ‘j’ has changed. We are inside the nested for loop, and each time we step we are letting IntelliJ update our sum variable. Keep clicking “Step” until one of your variables gives you an error:

What does this tell us? It tells us that matrix[i][j] produced an IndexOutOfBoundsException when i = 1 and j = 2. Does the index matrix[i][j] not exist? Let’s set some Watches and run through the execution again.

Let’s set watches for i (the row we are on), j (the column we are in), the matrix length, and the matrix width. To do so, click the plus sign and type the variable names you want to watch + enter.

Alright, now let’s click ‘Rerun Matrix’ in the top left of the Debugging footer to restart the execution.

Now, “step” through the execution again until you receive the IndexOutOfBoundsException error for one of your variables. Ignore the warning in Watches about not knowing what ‘j’ is; this is because you are not inside the inner for loop anymore, so ‘j’ is out of scope.

Here is what we should have for Watches:

Remembering the Arrays lecture from week 5, we should notice a problem immediately. We are indexing the array by row i and column j. matrix.length gives us the number of rows in the matrix, but the row at matrix[i] only has 2 columns (matrix[i].length). Since the index of an array starts at 0, an array of length 2 only has indices 0 and 1. Yet, we are trying to access matrix[1][2], which does not exist. How did j get outside the number of columns? Looking at our inner for loop again, we see the problem.

for(int j = 0; j < matrix.length; j++)

Our j is bounded by the length of the matrix (number of rows), not the width of the specific row we are looking at, as given by matrix[i].length. Now, let’s change that inner for loop to:

for(int j = 0; j < matrix[i].length; j++)

and Rerun our program through the debugger again. This time, we should get all the way through our loops and if we click “Console” at the top of the debugging footer, we will see a sum of 100.
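If you’d like a version you can paste straight into IntelliJ and step through, here is a minimal sketch that puts the buggy and fixed loops side by side. The class name, method names, and the three-row jagged array are made up for illustration; the point is only that the inner loop bound is the difference between a crash and a correct sum.

```java
// Hypothetical example: the array contents and names are invented for illustration.
class JaggedSum {
    // Buggy version: the inner loop is bounded by the number of rows.
    static int sumBuggy(int[][] matrix) {
        int sum = 0;
        for (int i = 0; i < matrix.length; i++) {
            for (int j = 0; j < matrix.length; j++) {
                sum += matrix[i][j]; // throws once j runs past the end of a short row
            }
        }
        return sum;
    }

    // Fixed version: the inner loop is bounded by the length of the current row.
    static int sumFixed(int[][] matrix) {
        int sum = 0;
        for (int i = 0; i < matrix.length; i++) {
            for (int j = 0; j < matrix[i].length; j++) {
                sum += matrix[i][j];
            }
        }
        return sum;
    }

    public static void main(String[] args) {
        int[][] matrix = { {1, 2, 3, 4}, {5, 6}, {7, 8, 9} };
        try {
            sumBuggy(matrix);
        } catch (ArrayIndexOutOfBoundsException e) {
            System.out.println("buggy loop threw: " + e.getMessage());
        }
        System.out.println("fixed sum = " + sumFixed(matrix));
    }
}
```

Set a breakpoint on the `sum += matrix[i][j];` line in sumBuggy and add Watches for i, j, matrix.length, and matrix[i].length to watch j walk off the end of the second row.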

For more practice, plus if you’re struggling with the “this” keyword, play around with the debugger in IntelliJ with the following code.

    public class InstanceVar {
        public int key = 5;

        public InstanceVar(int key) {
            this.key = key;
        }

        public static void main(String[] args) {
            InstanceVar a = new InstanceVar(3);
            System.out.println(a.key);
        }
    }

Set breakpoints at lines 9 and 10 (InstanceVar creation and the print statement). Add Watches for “this.key” and “key.” Follow the blue “about to be executed” line and watch how this.key changes as the lines are executed. Take special note of this step just before we set this.key = key:

this.key already has a value of 5, derived from the instance variable declared before the constructor (line 2). When we pass a key value of 3 to the constructor, “step” forward and you will see that we are merely updating the key value for this instance of the class. Each additional InstanceVar object you create will have its own value for key, depending on what you pass to the constructor.

Semantic Errors

The final frustrating case that can be aided by IntelliJ’s debugging tools is the case of semantic issues with your code. Your code compiles fine and doesn’t crash, yet the output isn’t what it should be. Two prominent examples of this are accidental integer division and incorrect String comparison. Luckily, IntelliJ can help.

Consider the nthRoot(double value, int root) method from Lab05 MathTools. Many students had an issue where their method would always produce 0. Often, it was because of this:

x_k_1 = (1/root) * (((root -1) * x_k) + ....;

With integer division, (1 / root) will always resolve to 0 for any root greater than 1, as both operands are ints. By setting breakpoints and Watching certain variables, we can pinpoint this more quickly and less painfully. For some practice, pull up your Lab05 code, change your x_k_1 formula to use 1 / root, set a breakpoint where this formula is calculated, and step through the execution. To make things simpler, add a main method with a simple call to nthRoot like this:

    public static void main(String[] args) {
        System.out.println("3rd root " + nthRoot(125.0, 3));
    }
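If you don’t have your Lab05 code handy, here is a small stand-alone sketch of the same mistake. It is not the Lab05 reference solution: the class, the method names, the starting guess, and the stopping rule are my own assumptions, built around a Newton’s method update. The only difference between the two step methods is 1 / root versus 1.0 / root.

```java
// Hypothetical Newton's-method sketch; not the Lab05 reference solution.
class NthRootDemo {
    // Buggy: 1 / root is integer division, so the whole product is 0 for root > 1.
    static double stepBuggy(double value, int root, double xK) {
        return (1 / root) * (((root - 1) * xK) + value / Math.pow(xK, root - 1));
    }

    // Fixed: 1.0 / root forces floating-point division.
    static double stepFixed(double value, int root, double xK) {
        return (1.0 / root) * (((root - 1) * xK) + value / Math.pow(xK, root - 1));
    }

    // Iterates the fixed step until the guess stops changing (assumes value > 0).
    static double nthRoot(double value, int root) {
        double xK = value; // starting guess
        for (int k = 0; k < 200; k++) {
            double next = stepFixed(value, root, xK);
            if (Math.abs(next - xK) < 1e-12) {
                return next;
            }
            xK = next;
        }
        return xK;
    }

    public static void main(String[] args) {
        System.out.println("buggy first step: " + stepBuggy(125.0, 3, 125.0));
        System.out.println("3rd root " + nthRoot(125.0, 3));
    }
}
```

Put a breakpoint inside stepBuggy and add a Watch for “1 / root” to see the 0 appear before it wipes out the rest of the expression.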

The other big semantic issue that we often see is improper String comparison. In Java, Strings are objects, not primitive types. Therefore, comparison operators such as == don’t compare their contents. All the objects you declare and initialize have a reference in memory. To see an object’s reference, you can just print it. For example:

    ClassName one = new ClassName();
    ClassName two = new ClassName();
    System.out.println(one + " " + two);
    System.out.println(one == two);

You should see something like:

    ClassName@457471e0 ClassName@7ecec0c5
    false

When you use the == operator on two objects, you are comparing their reference values in memory NOT any value they hold. Change the 2nd print statement to

System.out.println(one == one);

This will return true, as you’re comparing the same reference address to itself. In Java, a String is a special kind of object that, although it behaves differently, still has a reference address behind it. While this is easy to see for arbitrary objects, people (myself included) often forget about this nuance with Strings and try to get away with something like:

    String name = new String("Purdue");
    String other = new String("Purdue");
    if(name == other) // this is false
        System.out.println("Same name.");

Debugging this can be difficult if you’ve forgotten how Strings work, which is where IntelliJ comes in. If you set a breakpoint at the if-statement and set a Watch for “name == other,” you’ll be able to quickly realize that there’s something wrong with your logic. In your variables panel, you can see the reference value that each String has in curly braces: {String@600}, {String@601}. In your Watch panel you’ll see “name == other” resolve to false.
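For completeness, the usual fix is to compare contents with the String equals method instead of ==. Here is a minimal sketch; the class and helper method names are just for illustration.

```java
// Illustrative helper names; String.equals itself is standard Java.
class StringCompare {
    // True only when both variables point at the very same object.
    static boolean sameReference(String a, String b) {
        return a == b;
    }

    // True when both Strings hold the same characters.
    static boolean sameContents(String a, String b) {
        return a.equals(b);
    }

    public static void main(String[] args) {
        String name = new String("Purdue");
        String other = new String("Purdue");

        System.out.println("name == other:      " + sameReference(name, other));
        System.out.println("name.equals(other): " + sameContents(name, other));

        if (name.equals(other)) {
            System.out.println("Same name.");
        }
    }
}
```

Try adding a second Watch for “name.equals(other)” next to “name == other” to see the two expressions disagree at the same breakpoint.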

Extra Knowledge: There is a difference between String objects and String literals. Change your String declarations to this:

    String name = "Purdue";
    String other = "Purdue";

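and rerun your program with the debugger. If you look at the variables panel, you’ll see that the reference value in curly braces is the same for both, and your “name == other” Watch expression will be true. It’s complicated, but the difference is this: identical String literals are stored once in a shared pool, so both variables refer to the same object, whereas new String(...) always creates a separate object.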

For extra insight, watch how the JVM handles a situation where a variable holding a String literal is reassigned during execution. Run the following and set a breakpoint at the line “name = “IU”;”

    String name = "Purdue";
    String other = "Purdue";
    name = "IU";
    if(name == other)
        System.out.println("Both Purdue");

Before the line name = “IU”, the JVM has given name and other the same reference address. Strings are immutable, so the assignment doesn’t change the “Purdue” object. Instead, name is pointed at a different String object (“IU”) with its own reference address, while other still refers to “Purdue”.

Conclusion:

While the last example was a very advanced topic for this class, it’s a case where using breakpoints and setting Watches allows you to see intimately “under the hood” as your program executes. If you aren’t quite understanding how your program is actually working, using the debugger in IntelliJ is a great way to not only find bugs, but also deepen your understanding of the intricacies of Java. Additionally, it’s great for final exam preparation.

It was shocking yesterday when John Boehner announced that he will resign not only from his role as Speaker of the House of Representatives, but from his elected seat as well. While the sympathy shouldn’t go on for too long (he’ll surely land a very well-paying job as a lobbyist in Washington), you do have to feel for him to an extent. Here was a man that was truly stuck between a rock and a hard place, and decided to fall on the sword if it meant preserving the Republican Party as well as the institution.

Through his tenure as Speaker, Boehner negotiated constantly with not only the Democrats and the Obama administration, but also the growing fringe of his own party. Not only this, but often those same members would immediately go back on their word and go to extreme lengths (government shutdowns) to try to get their way, even when success was clearly not probable. Eric Cantor, with whom Boehner often clashed heavily, put it best in a quote that appeared in an article on The Hill:

“I have never heard of a football team that won by throwing only Hail Mary passes, yet that is what is being demanded of Republican leaders today. Victory on the field is more often a result of three yards and a cloud of dust.”

It seems as though Boehner’s resignation was part fatigue from the in-fighting, ahead of another fight with the GOP fringe over defunding Planned Parenthood at all costs, and part recognition that having to endure another long and publicly brutal challenge to his leadership would be detrimental to the image of the party, if it could get any worse. In the end, I think it’s great that Boehner finally packed it in. Negotiating with terrorists is never a good thing, especially when they reside in your own camp.

When people see that I proudly (and loudly) rock a BlackBerry Passport in 2015 even though I’m under 25 years old, I always hear the same remarks about apps. “Where are the apps?” “How do you live without SnapChat?” “But you can’t get app ‘x’ on BlackBerry” etc. However, what people don’t understand is that the unsung and subtle feature of BlackBerry is its unrivaled efficiency as a device because of its lack of tangential applications. Sure, I install Android .apks of some popular applications I might need (Instagram and Groupme, which both work fine), but otherwise I have the most productive device on the market today.

It took a change in philosophy of what I wanted my phone to do well to reach this point. I decided that I was going to prioritize calendar, email, phone, and messaging above all else and the BlackBerry 10 OS is clearly geared towards this kind of user. My phone is now a way to stay engaged with those who matter as well as organize and prioritize events and opportunities as they come, not become lost in any of the countless “black hole of time” applications that exist in other app stores. That’s all my BlackBerry does for me, and that’s all I want it to do.

One of the most interesting projects I recently came across was this tutorial on building a 4-node cluster using Raspberry Pis. This is really cool as you can build a small n-node supercomputer of sorts with distributed computing. I’m working with a team that is exploring some pretty heavy image analysis programs on video streams, so this could be a nice concurrent project to help us in our development. I’m going to have a crack at it over the next few weeks and will post how it goes, but the tutorial thankfully looks really thorough.

I always like to offer notes on interesting projects that I work on at Purdue, if not to aid my own reflection then to share ideas, problems, and solutions. For my Systems Programming class, we were asked to implement a memory allocator in C. I had worked on some components of an Operating System before, implementing a system printf function in the XINU operating system, but this was a massive step up in both scope and relevance. I couldn’t wait to dig in.

Obviously, my implementation was going to be nowhere near as thorough and heavily tested as the real thing, but the fundamentals of it were quite revealing. Aside from becoming intimately re-familiarized with manipulating pointers, the biggest thing I came away with has to be just how conceptually simple yet fragile free and malloc are.

The intricacies of the process were at times overwhelming. When allocating, traversing the linked list for an appropriately sized memory block, properly sizing the headers and footers of memory blocks, and splitting and returning excess memory to the free list when necessary were conceptually simple but at times difficult to implement. Freeing memory and coalescing neighboring memory blocks when necessary really underlined just how fragile the methods used for malloc / free are; with every error I became even more sympathetic to those behind all the blue screens of death I experienced growing up.
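To give a feel for the bookkeeping involved, here is a small simulation of first-fit allocation with splitting and coalescing. It is written in Java rather than C and is not my project code: it models the heap as plain offsets in a sorted map instead of real memory with headers and footers, and every name in it is made up for illustration.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical simulation: tracks blocks as (offset -> size), not real memory.
class FreeListSim {
    private final TreeMap<Integer, Integer> freeBlocks = new TreeMap<>();
    private final TreeMap<Integer, Integer> allocated = new TreeMap<>();

    FreeListSim(int heapSize) {
        freeBlocks.put(0, heapSize); // the whole heap starts as one free block
    }

    // First fit: take the lowest free block that is big enough, split off the excess.
    int malloc(int size) {
        if (size <= 0) {
            return -1;
        }
        Integer found = null;
        for (Map.Entry<Integer, Integer> block : freeBlocks.entrySet()) {
            if (block.getValue() >= size) {
                found = block.getKey();
                break;
            }
        }
        if (found == null) {
            return -1; // no block large enough
        }
        int blockSize = freeBlocks.remove(found);
        if (blockSize > size) {
            freeBlocks.put(found + size, blockSize - size); // return the remainder
        }
        allocated.put(found, size);
        return found;
    }

    // Free a block and merge it with free neighbors on either side.
    void free(int offset) {
        Integer size = allocated.remove(offset);
        if (size == null) {
            throw new IllegalArgumentException("not an allocated block: " + offset);
        }
        int start = offset;
        int length = size;
        // Merge with a free block that ends exactly where this one starts.
        Map.Entry<Integer, Integer> before = freeBlocks.lowerEntry(start);
        if (before != null && before.getKey() + before.getValue() == start) {
            start = before.getKey();
            length += before.getValue();
            freeBlocks.remove(before.getKey());
        }
        // Merge with a free block that starts exactly where this one ends.
        Integer afterSize = freeBlocks.remove(start + length);
        if (afterSize != null) {
            length += afterSize;
        }
        freeBlocks.put(start, length);
    }

    int freeBlockCount() {
        return freeBlocks.size();
    }

    public static void main(String[] args) {
        FreeListSim heap = new FreeListSim(100);
        int a = heap.malloc(30);
        int b = heap.malloc(30);
        int c = heap.malloc(30);
        heap.free(a);
        heap.free(c);
        System.out.println("free blocks before freeing b: " + heap.freeBlockCount());
        heap.free(b);
        System.out.println("free blocks after freeing b:  " + heap.freeBlockCount());
    }
}
```

The map does all the neighbor lookups here. In C, the same lookups are pointer arithmetic on headers and footers, which is where one wrong offset quietly corrupts the whole list.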

Nonetheless, this project was a lot of fun as it was both challenging and also readily relevant. Certainly, this project contributed greatly to widening the distribution of the timestamps of my GitHub commits, but I can’t wait to see what we cover next.

Foreword: This piece is entirely my opinion. I dual majored in Political Science and Computer Science, and completed my Senior Seminar class + paper on Southern Politics.

I can’t help but draw parallels between the nature of the current GOP primary races and elements of traditional Southern politics back in the days of Democrat dominance in the South. It’s something I constantly find myself having to remember, but the political structures are not that far removed from realignment, so this shouldn’t come as too much of a surprise. Yet watching the debates, campaign subject matter, as well as how candidates speak to and of each other really pushed me to offer some reasoning from our past.

First and foremost, the issue of realignment. I feel that studying realignment is crucial to understanding how and at what rate electoral blocs in the South transitioned from Democrat to Republican. From the end of Reconstruction in the South to the Presidency of FDR, Democrats held a near monopoly at every level of political power. Yet, as FDR and Truman introduced inclusion policies pushing equality in government positions, the Democratic hold on the South started to splinter.

This came to a head of sorts in 1948 when Southern Democrats, called Dixiecrats, staged a walkout at the Democratic Convention to protest anti-segregationist policies that were quickly being adopted as part of the national platform. The fallout really accelerated in 1964, when LBJ signed the Civil Rights Act of 1964. As he signed it, he famously remarked, “I’ve lost the South for a generation.” As it turns out, it’s been even longer. Looking at Southern voting data from Presidential, State, and Local elections, a pattern of shifts from Democrat to Republican appears quickly at the Presidential level, and more slowly at State and Local levels. The deal was sealed in 1980, when Ronald Reagan visited Philadelphia, Mississippi, a location grimly known for the 1964 murders of 3 civil rights activists advocating for the rights of African Americans. Reagan delivered a message of states’ rights that resonated strongly with a Southern electorate that had felt disenfranchised and unrepresented by the national Democratic party. Finally, Southerners had found their party.

Understanding the transition from Democrat to Republican, now let’s take a look at understanding just how Democratic candidates were able to be elected in near monopoly conditions. In reality, the Democratic primary was the real election as there hardly was a Republican challenger that was relevant. Additionally, the South has a unique quality to it where it is more rural, and often different regions of a state had their own “candidate” that they would all advocate for, leading commonly to situations where 5 candidates would each receive roughly equivalent votes based on regional affiliation. One way to rise above the fray, as noted by renowned political scientist V.O. Key Jr in his famous work “Southern Politics in State and Nation,” was for candidates to say absurd, fear-mongering statements in order to shock people into giving their support. In practice, making noise was more important than substance in order to stand out in a primary field featuring a multitude of candidates.

Why does this matter? I can’t help but feel that the politics and methods of old Southern Democrats, who have since realigned to the Republican party, are rearing their somewhat ugly head once again. Looking at the 2016 candidates, you have many who come from different parts of the South (such as Cruz, Jindal, Bush and Huckabee among others), and a field that hasn’t accomplished a lot of separation. Additionally, and perhaps because of this, the campaign narratives and debates have often featured personal attacks between candidates as well as fear-mongering / demonization of a group of people in order to advance a candidate’s stature. We’ve seen the demonization of the religion of Islam, Muslim people, Mexican immigrants, as well as the propagation of myths such as Sharia-law based “no-go” zones emerging across the United States. We’ve also seen personal attacks between candidates on personal history and appearance. Thus, while many are quick to chalk up this madness as an anomaly election, I can’t help but offer the opinion that this is the return of politics that are well rooted in some of the darkest days of our democracy.

* This piece is entirely opinion based upon the conclusions I’ve drawn from different books, essays, and papers I’ve read regarding the nature of Southern Politics. Here is some of that material that immediately comes to mind: